To select a Python version in the pipeline, the required versions must first be installed on the Jenkins agent that runs the build.

The steps below were performed on CentOS 7. For convenience, the new binaries are installed into "/usr/bin/", because the system's own Python "2.7" and "3.6" (installed from the repositories) already live there.

Install the build dependencies:

```shell
yum install gcc openssl-devel bzip2-devel libffi-devel wget
```

Download the source archives for the required versions, in this case "3.7", "3.8" and "3.9":

```shell
cd /usr/src
wget https://www.python.org/ftp/python/3.7.9/Python-3.7.9.tgz
wget https://www.python.org/ftp/python/3.8.9/Python-3.8.9.tgz
wget https://www.python.org/ftp/python/3.9.5/Python-3.9.5.tgz
```
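If you need more versions later, the download step can be wrapped in a small loop. This is a minimal sketch, assuming the python.org URL layout used by the commands above; `python_src_url` and `fetch_python_sources` are hypothetical helper names, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not from the original guide): build the python.org
# source URL for a release and download several releases in one go.

# Print the tarball URL, assuming the layout
# https://www.python.org/ftp/python/<version>/Python-<version>.tgz
python_src_url() {
  printf 'https://www.python.org/ftp/python/%s/Python-%s.tgz\n' "$1" "$1"
}

# Download each requested release into the current directory,
# skipping tarballs that are already present.
fetch_python_sources() {
  local ver
  for ver in "$@"; do
    if [ -f "Python-${ver}.tgz" ]; then
      echo "skip Python-${ver}.tgz (already downloaded)"
      continue
    fi
    wget "$(python_src_url "$ver")" || return 1
  done
}
```

After sourcing the file, `cd /usr/src && fetch_python_sources 3.7.9 3.8.9 3.9.5` fetches the same three archives as the commands above.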

Extract the archives:

```shell
tar xzf Python-3.7.9.tgz
tar xzf Python-3.8.9.tgz
tar xzf Python-3.9.5.tgz
```
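A truncated download usually surfaces as a tar error at this point. A possible sketch, using a hypothetical `extract_python_sources` helper, extracts each archive and stops early if the expected "configure" script is missing from the source tree:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): extract each
# Python-<version>.tgz in the current directory and check that the
# resulting source tree contains a configure script.
extract_python_sources() {
  local ver
  for ver in "$@"; do
    if ! tar xzf "Python-${ver}.tgz"; then
      echo "failed to extract Python-${ver}.tgz" >&2
      return 1
    fi
    if [ ! -f "Python-${ver}/configure" ]; then
      echo "Python-${ver}/configure not found" >&2
      return 1
    fi
    echo "extracted Python-${ver}"
  done
}
```

For example: `cd /usr/src && extract_python_sources 3.7.9 3.8.9 3.9.5`.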

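Build and install each version. "make altinstall" is used instead of "make install" so that only versioned binaries such as "/usr/bin/python3.7" are created and the system's existing "python3" link is not overwritten. The "--enable-optimizations" flag turns on profile-guided optimization, which makes the build noticeably slower.

```shell
cd Python-3.7.9
./configure --enable-optimizations --prefix=/usr
make altinstall

cd ../Python-3.8.9
./configure --enable-optimizations --prefix=/usr
make altinstall

cd ../Python-3.9.5
./configure --enable-optimizations --prefix=/usr
make altinstall
```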
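The three build blocks differ only in the directory name, so they can be expressed as one loop. This is a sketch assuming the source trees were extracted into the current directory; `build_python_versions`, `run_or_echo` and the `DRY_RUN` switch are hypothetical additions. With `DRY_RUN=1` the commands are only printed, which is a cheap way to check the list before a long build.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not from the original guide).

# Run a command, or only print it when DRY_RUN=1.
run_or_echo() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Configure and install each Python-<version> tree with the same flags as above.
# altinstall creates only pythonX.Y and leaves the system's python3 link alone.
build_python_versions() {
  local ver
  for ver in "$@"; do
    (
      cd "Python-${ver}" &&
        run_or_echo ./configure --enable-optimizations --prefix=/usr &&
        run_or_echo make altinstall
    ) || return 1
  done
}
```

Preview with `cd /usr/src && DRY_RUN=1 build_python_versions 3.7.9 3.8.9 3.9.5`, then run it again as root without `DRY_RUN=1`. Each build runs in a subshell, so a failing `cd` or `configure` stops the loop without changing the caller's working directory.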

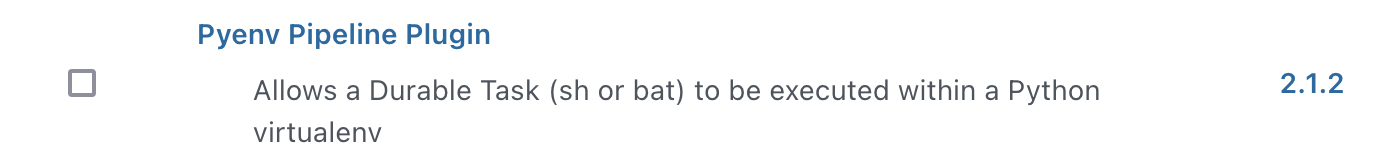

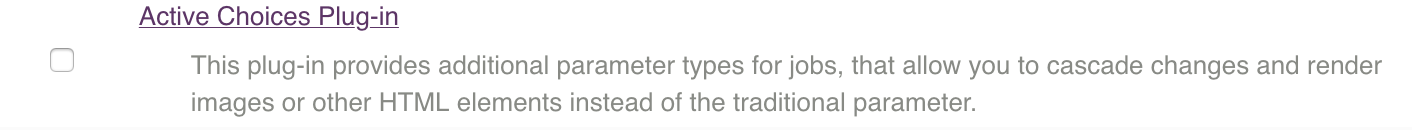

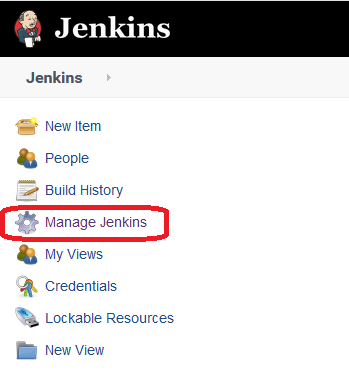

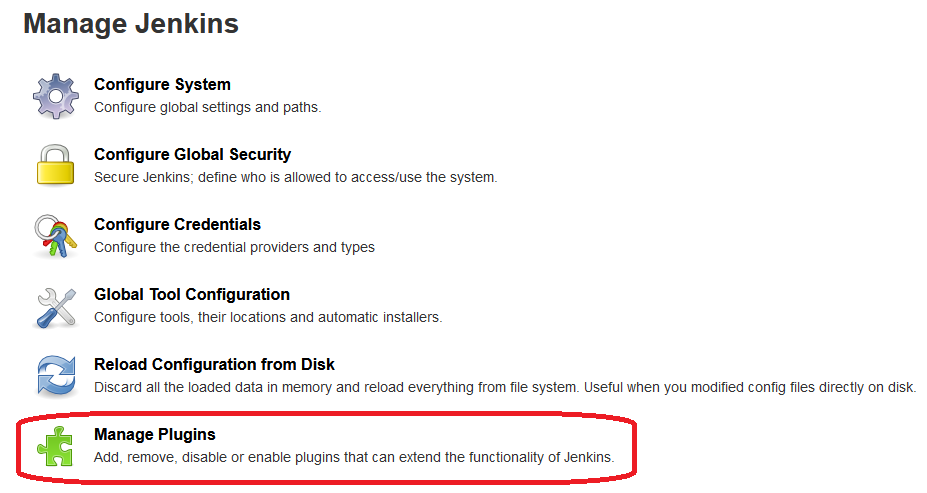

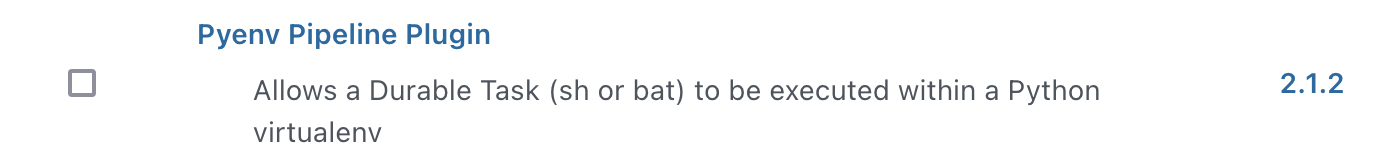
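Before moving on to Jenkins, it is worth confirming that every interpreter the pipeline will offer actually exists on the agent. A small check, sketched with a hypothetical `check_pythons` helper that takes the bin directory followed by a list of versions:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): report which
# pythonX.Y executables exist in a directory; returns 1 if any is missing.
check_pythons() {
  local bindir="$1"
  shift
  local missing=0 ver exe
  for ver in "$@"; do
    exe="${bindir}/python${ver}"
    if [ -x "$exe" ]; then
      # Python 2.7 prints --version to stderr, so merge both streams
      printf 'OK python%s: %s\n' "$ver" "$("$exe" --version 2>&1)"
    else
      printf 'MISSING python%s\n' "$ver"
      missing=1
    fi
  done
  return "$missing"
}
```

Running `check_pythons /usr/bin 2.7 3.6 3.7 3.8 3.9` mirrors the version list used in the pipeline's choice parameter.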

Now let’s install the Pyenv Pipeline plugin:

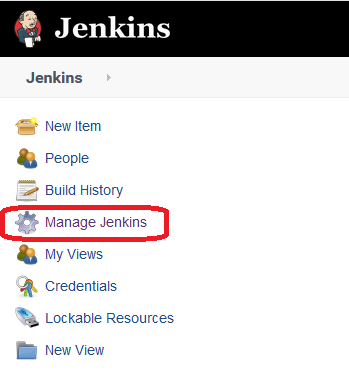

Go to "Manage Jenkins":

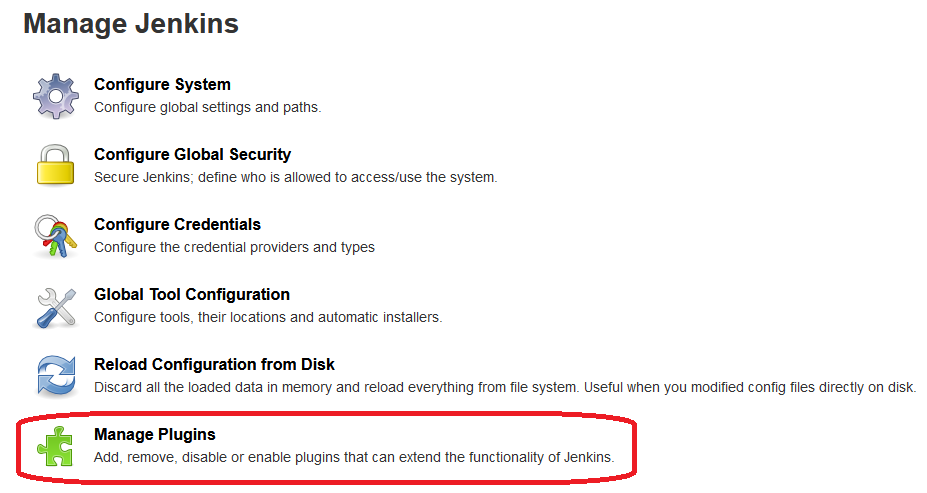

Open the "Manage Plugins" section, go to the "Available" tab, search for "Pyenv Pipeline" and install it.

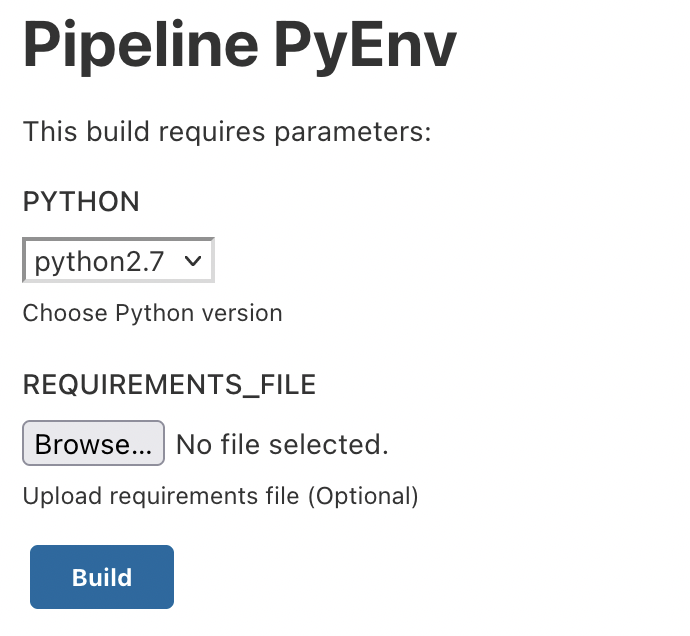

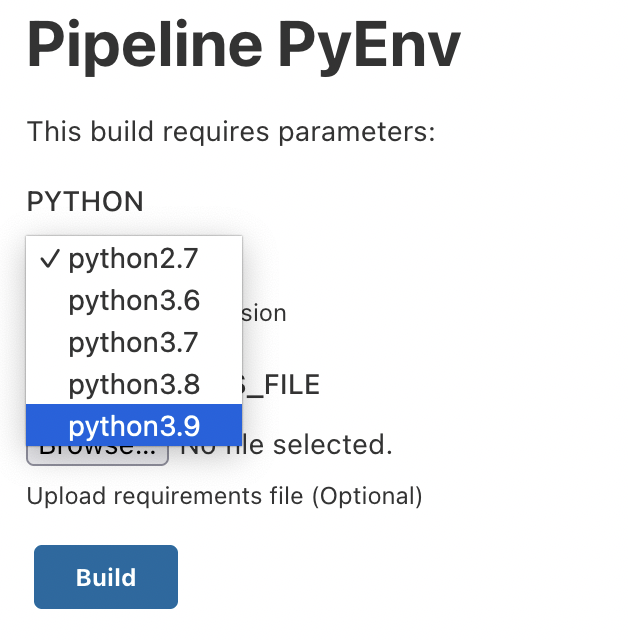

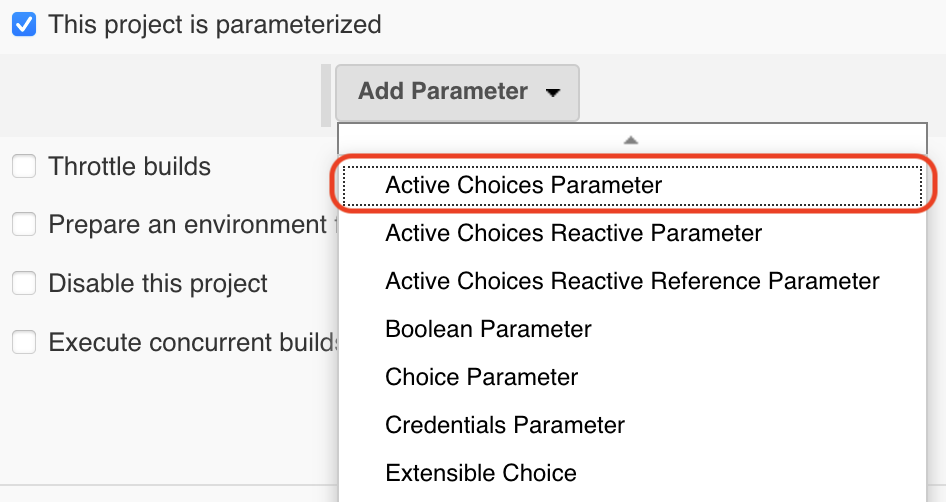

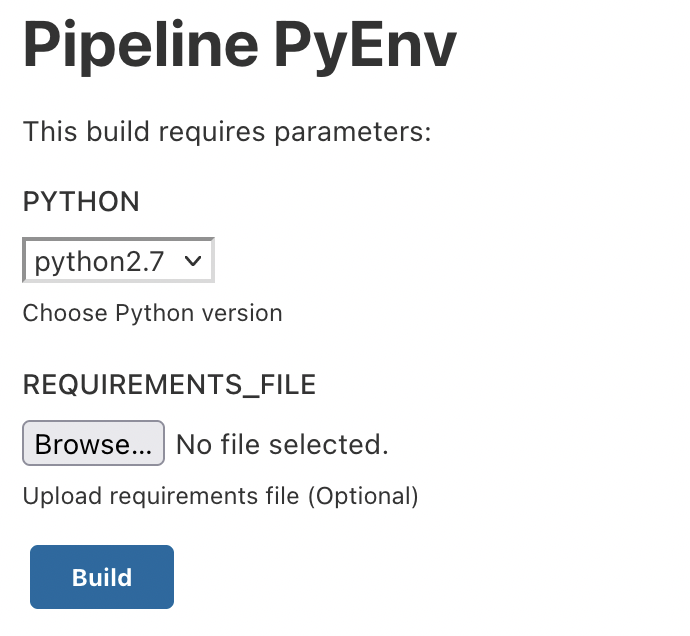

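To select the version, we will use a "choice" parameter. The optional requirements file is passed with a "base64File" parameter; that parameter type and the "withFileParameter" step come from the File Parameters plugin, so it must be installed as well.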

Pipeline:

```groovy
properties([
    parameters([
        choice(
            name: 'PYTHON',
            description: 'Choose Python version',
            choices: ["python2.7", "python3.6", "python3.7", "python3.8", "python3.9"].join("\n")
        ),
        base64File(
            name: 'REQUIREMENTS_FILE',
            description: 'Upload requirements file (Optional)'
        )
    ])
])

pipeline {
    agent any

    options {
        buildDiscarder(logRotator(numToKeepStr: '5'))
        timeout(time: 60, unit: 'MINUTES')
        timestamps()
    }

    stages {
        stage('Python') {
            steps {
                // Pyenv Pipeline: run the block in a virtualenv built from the chosen interpreter
                withPythonEnv("/usr/bin/${params.PYTHON}") {
                    script {
                        sh 'python --version'
                        sh 'pip --version'

                        // Groovy truth: both null (nothing uploaded) and '' count as "not set"
                        if (!env.REQUIREMENTS_FILE) {
                            echo 'Requirements file not set. Running Python without a requirements file.'
                        } else {
                            echo 'Requirements file found. Running pip install with the requirements file.'
                            // Inside withFileParameter, $REQUIREMENTS_FILE is the path to a temporary copy of the upload
                            withFileParameter('REQUIREMENTS_FILE') {
                                sh 'cat "$REQUIREMENTS_FILE" > requirements.txt'
                            }
                            sh 'pip install -r requirements.txt'
                        }
                    }
                }
            }
        }
    }
}
```
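As an alternative to "withFileParameter", the File Parameters plugin's README describes the "base64File" value as also being available as a base64-encoded environment variable, so a plain "sh" step could decode it. The shell side of that is sketched below, assuming the variable holds the encoded upload and is empty or unset when nothing was uploaded; `write_requirements` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): decode the base64File
# value held in REQUIREMENTS_FILE into a requirements file. Assumes the
# variable contains the base64-encoded upload, or is empty/unset otherwise.
write_requirements() {
  local out="${1:-requirements.txt}"
  if [ -z "${REQUIREMENTS_FILE:-}" ]; then
    echo "Requirements file not set." >&2
    return 1
  fi
  printf '%s' "$REQUIREMENTS_FILE" | base64 -d > "$out"
}
```

Inside the pipeline, the equivalent one-liner would be `sh 'printf "%s" "$REQUIREMENTS_FILE" | base64 -d > requirements.txt'`.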

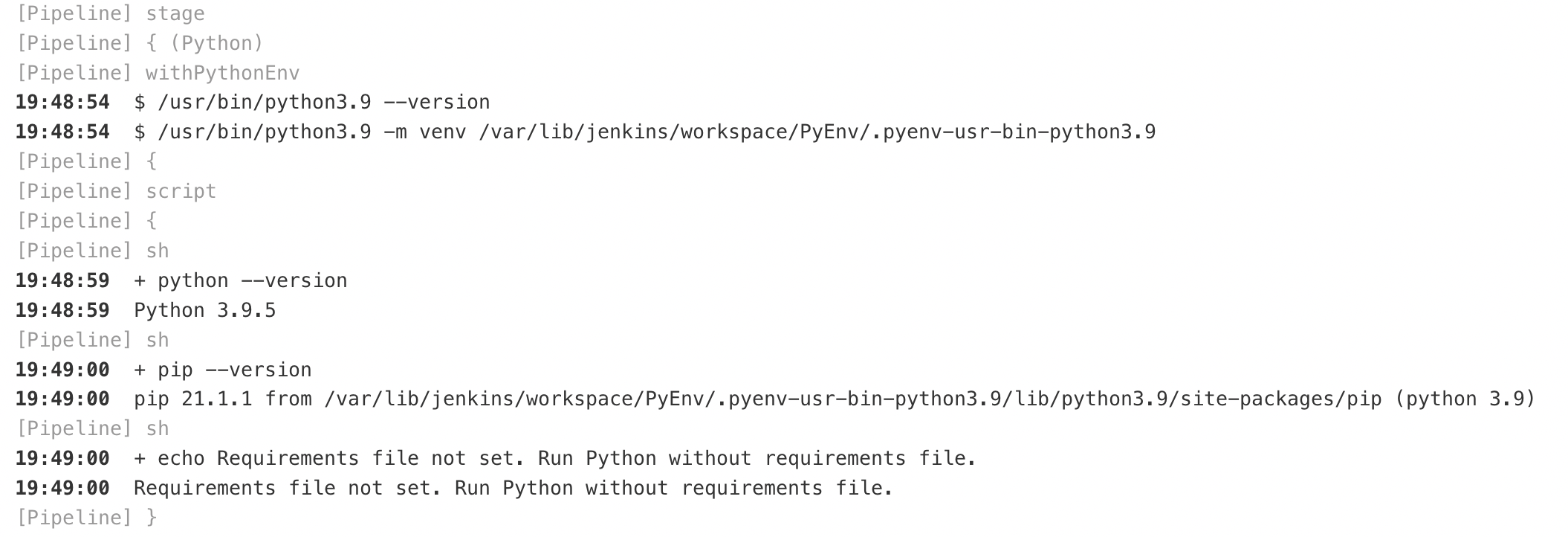

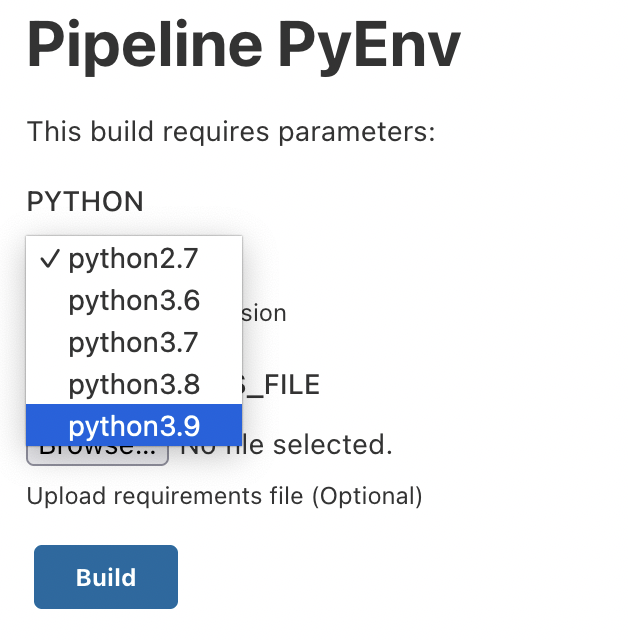

Let’s start the build. Select the desired version, for example "python3.9", and run it:

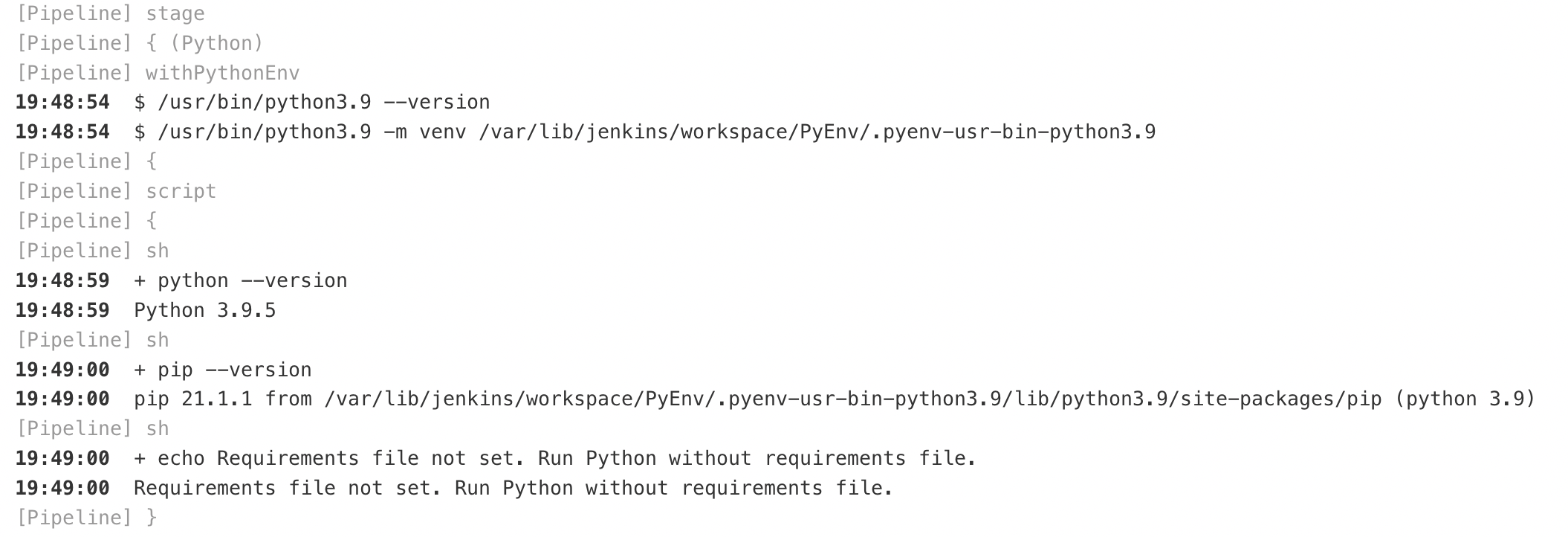

Let’s check the build log: